Points to and shakes the robot’s hand

Intimacy as situated interactional maintenance of humanoid technology

The German version of this text was published in Zeitschrift für Medienwissenschaft, No. 15, 2016.

British comedian Eddie Izzard is on the Hammersmith Apollo stage as part of his 1997 piece Glorious. Izzard performs his «love and hate relationship with technology.»1 To express his «techno joy», he contrasts «everyone on film» who is «so swish, so smooth … so expert on that computer» with somebody rendered «in a realistic way.» That somebody, performed by Izzard, tries to connect a computer with a printer. The sequence starts when Izzard mimes the act of placing the two pieces of technology – the computer and the printer – in adjacent locations. His next move is to type «Control, P, Print» into the computer. In response to this command, the computer displays the reply «Cannot access printer». The actor then turns toward the printer and, with a puzzled expression, uses both hands to point toward it while he exclaims: «it’s here.» Pronounced laughter emanates from the audience. Izzard looks back toward the computer, turns again toward the printer, and repeats the pointing gesture. The audience’s laughter and clapping increase. Izzard then picks up the computer and turns its screen toward the printer’s location, as if allowing the computer to look at the printer. Another wave of laughter rises from the theatre.

The scene is comical (or, we understand why it is comical to those who find it comical) because it is easy to identify with the character who, frustrated with the computer, cannot understand why the technology doesn’t simply experience the world as we do. This is exactly where social robotics enters the scene. This academic but also practically oriented endeavor is intended to address the gap that Izzard points to in the scene. The field dates back to the late ’90s/early 2000s, and – located somewhere between cognitive science and engineering – centers its efforts on designing robots meant to give an impression of sharing our embeddedness in the socio-material world.2 In other words, social roboticists are so invested in producing an ‹intuitive› machine that they go as far as to envision it as sharing our bodily as well as social characteristics. In Izzard’s example, these computational machines would, just like us, notice the printer in the adjacent location, while also responding to the pointing gesture and the verbal indication «it’s here.» By virtue of their design, social robots would thus make our interaction with them straightforward and possibly trouble-free.

I encountered social robots as part of my ethnographic study of robotics laboratories. Although these machines are envisioned with such intentions, in none of my encounters with them did interacting with them appear «so swish, so smooth». Grounding my project in the field of Science and Technology Studies (STS), and engaging with the problem of human-technology interaction from the perspective of laboratory studies,3 I observed the actual production of the technology by «following» robotics engineers as they went about their everyday research activities.4 I noticed that ordinary encounters with social robots, unlike those of «everyone on film», are often either colored by frustration (similar to the case of the character played by Izzard), or they call for a situated interactional maintenance of the bodies at play. This second scenario is what I will here call ‹intimacy›. Intimacy, in this context, concerns the enactment of a robotic technology intended as ‹social› through an intertwining of its body with multimodal and multisensory elements of concerted action in specific situations of design and use.

Considering social robots through such a lens suggests a twist on – if not a reconfiguration of – the commonly assumed idea of self and agency. While the modern Western concept of agency directs us toward individual interiority, here the robot gains its own agency – as an actor that is alive and even social – when its designed body is situated in a web of multiparty moves in social robotics. This means that, rather than exclusively focusing on how agency is inscribed in the design of a humanoid technology,5 we are now attending to how agency is circumstantially distributed and managed through the situational details of practice. To get at this phenomenon concretely, I will describe how the maintenance of a social robot is accomplished in an everyday moment of interaction in social robotics. When conducting my ethnographic observations, I combined traditional note-taking with video-recording, as my aim was to explore the experiential dimension of human-robot engagement. Here, I will work with a section from these recordings to get at the multimodal and multisensory aspects (gesture and touch, for example) of the concerted action that develops through the scene we will focus on. Looking at those features to bring forth the role multiparty maintenance plays in the achievement of the robot’s agency should not, however, be understood as a return to the dominance of the human designer. In other words, my intervention is neither about control nor about some romantic idea of intimacy. It is, instead, about embeddedness in practical circumstances that unavoidably call for certain moves on the part of those participating in the scene.

But let me first briefly touch upon the first scenario that I mentioned, where humanoid technologies are treated with what appears to be frustration or even violence.

Gaze is Hate

Outside of science fiction, social robots for now largely live in research laboratories. And when we see them roam outside what are traditionally conceived as research spaces, these robots are accompanied by their designers (be it proximally or remotely). Their counterparts, however, are among us, and encountering a robotic agent designed to communicate is nowadays neither rare nor exceptional, at least not among the middle-class Western populace. There are talking cell phones, supermarket self-service cashiers, ATMs, electronic toys, cars and other computational agents that display readiness to greet us while providing information or just recounting their emotional states.6 But when we come across those machines, the encounters often end in frustration or even violence.

For the last ten years, I have taught a class on communication and social machines. In that class, students were asked to find a chatterbot on the Internet – a computational agent programmed to simulate a conversation with a user – and analyze their interaction with it. Most students were not previously familiar with chatterbots, and, as documented by the written transcripts of those conversations, their conduct was more often than not negatively colored. Students would often ask the bot questions about its birth and parents, its religious creed, and what it was doing at that moment; and if the bot replied in ways that did not align with social norms of conversation, the students’ response was definitely not one of accommodation or engagement. Instead, they treated the machinic interlocutor with derision and verbal violence. Rather than minimizing the incongruities (which would presumably have allowed the conversation to continue), students called the bot names and seemed eager to voice their unwillingness to put up with the conversation any longer.

This comportment toward humanoid technologies readily brings to mind Furby, another commercial product designed to engage communicatively with humans. A simple YouTube search will generate a number of samples where people not only display their unwillingness to engage in conversational routines with the toy, but also enact delight in performing violence toward it, such as setting Furby on fire or burying it. And, as I write this, David Zwirner Gallery in New York City has on show Colored Sculpture by Jordan Wolfson, which explores this linkage between humanoid robots and violence.7

In Wolfson’s installation, the object on display has the visual features of a social robot, and in the ArtNews article «The Man-Machine: Jordan Wolfson on his Giant New Robot, Hung by Chains at Zwirner», Nate Freeman calls it a «robot» (also identified as «animatronics sculpture», «a figure supposed to represent Huck Finn», «a machine», «humanoid installation», etc.).8 From what a Google search allows me to gather, Wolfson’s robot is predominantly organized and engaged around gaze, while the other modalities are largely disregarded. First, the robot embeds facial recognition technology so that it can appear to exchange gazes with visitors and follow them around the gallery; I will return to this point later in this section. Second, the piece is about being viewed from a distance by its human visitors, and is conceived mainly as a spectacle. The centrality of this intense gazing is illustrated by the image that announced the show and is presently featured on the gallery’s website,9 reproduced here in Figure 1. While the majority of the images of Wolfson’s robot do not show humans, this image, as the ArtNews article explains, shows Wolfson taking a photo of the robot’s head with his phone. The position of the phone, which displays an image of the robot’s painful grimace with the artist’s hand gesturing over (or possibly pressing upon) it, presents the robot as something for others to see. While the act of touching the robot may be there to indicate the artist’s relationship with it, the image does not appear to be about that relationship. Rather, the robot is presented as something that those who see the photo taken by the artist can look at (but not otherwise engage with).

Figure 1: Wolfson photographing his Colored Sculpture.

Photo: Joshua White, JWPictures.com

This also seems to be the case with Wolfson’s older piece Female Figure (2014), an animatronics sculpture figured as a robotic striptease dancer wearing a witch mask. That sculpture too comes equipped with facial recognition technology, but this time, as Figure 2 exemplifies, the robot is installed to face its mirrored image. The artist describes his vision of the sculpture as follows:

«Intuitively, almost immediately, I imagined that there would be a mirror the animatronic looks through, creating something that functions as a camera frame for the viewer, so it would be like looking at her image in cinema or off your iPhone or whatever, and it would create that same bridge for the audience.»10

Again, most photos of Female Figure searchable on the Internet do not frame any humans with the robot; but those that do (one of which is reproduced in Figure 2) show gallery goers in the position of the robot’s onlookers or photographers. For both installations, it seems safe to say that, while the robot’s relationship with the viewer is one of detachment, the scene is organized to make the viewer feel uncomfortable, and possibly disgusted. Those emotions have to do with the staging of violence around which the installations are organized.

Figure 2: Wolfson’s Female Figure with two gallery goers looking at and seen in the mirror. Photo: Ruth Fremson/The New York Times/Redux

Regarding his visit to the Colored Sculpture installation at Zwirner, the ArtNews critic reports as follows:

«I entered the gallery alone, as Wolfson said he wanted me to experience the work alone. In front of me was a gigantic steel outline of a cube with cylinders spinning and whirring to release metal chains that were holding up the larger-than-life figure, which had joints throughout its body to allow it to be contorted in harrowing, grotesque ways. The winches that held up the chains went left to right in various speeds, and the chains were coiled and released in different measures, allowing for the figure to get twisted into any number of permutations – it’s a precise dance that Wolfson programmed himself, to allow for viewers to watch the figure float within the space in a carefully calibrated cycle of movements: quick starts, long silences and placid moments, atonal spasms, swooping glides, and those punishing falls to the floor. It became more wrenching and powerful the more cycles I took in. After some slow movements and dramatic silences, the figure would get thrashed toward me, the chains making deafening noises and the figure’s head getting smashed again and again on the ground.11»

And when the critic, in his interview with Wolfson, asks the artist «what the work meant to him», he reports that:

«[...] he went into a zone, saying, ‹It’s a set of formal problem solving issues,› and ‹the artwork is a multifaceted device›, and ‹it does elegance›, and ‹there’s an indifference to the movement›, and ‹it’s real violence damaging the work.›

Well, even if the work isn’t actually being damaged when it gets dropped to the floor or whipped around – it’s built in a way that can withstand the hits – the violence is absolutely present.»12

But, if we now turn to one of those computer science laboratories – where actual social robots can be encountered as part of everyday life – the scene changes. While it is undeniable that frustration – as in any kind of work (or design work more specifically) – abounds and occasional performances of violence are staged,13 robots are most often attentively engaged. These laboratories are places where participants respond to the demand of ‹getting-things-done›, and social robots are the things that need to be done. This process of doing, however, is not only about production, or even making, fusing technology and handwork. What we encounter there is also about accommodation and patience directed toward sustaining the technology.

In keeping with the theme of the special issue, I will look at this phenomenon of technological maintenance through the lens of intimacy. Rather than understanding intimacy as a sympathetic emotion, I treat the support provided and the entanglement between humans and technology in those settings as first of all a matter of labor and effort.14 Lucy Suchman points out that «the laboratory robot’s life is inextricably infused with its inherited materialities and with the ongoing – or truncated – labours of its affiliated humans.»15 I will show how this takes place in the interactional and sensory realm, focusing on the coordination of gesture, talk, body orientation, tactile engagement, spatial organization and arrangements of things and human bodies in laboratory work. Maintenance, as intended here, thus has a temporal component: the robot, as a social agent, is done step-by-step in interaction. Nevertheless, this term should not be equated with preservation. Rather than referring to something that already exists prior to an actual act of interaction (and is thus preserved across time), maintenance here concerns the process through which the sociality of the robot is realized and made possible.

I suppose that something not very dissimilar would also be available in the chatterbot and the robot-as-artwork cases if we were to observe how those artifacts were engaged with during their making. We might even be able to catch this interactional maintenance of the technology if we were to look at how it is actually engaged with in the classroom and the gallery (rather than only considering its discursive framings, the accompanying representational material, and the objects that remain as traces of those encounters). In the ArtNews piece, we catch a glimpse of it when the artist asks the critic (who is now visiting the gallery during a busy opening) to move to a specific spot in the gallery so that the robot can appear to be looking at him. Reporting on how the artist approached him, the critic writes:

«‹I’m so glad you came back›, he said with a smile, the brutality of his work unfurling in front of him. ‹You have to stand here›, he told me, gesturing at a spot directly in the middle.

After a few minutes of staring at the figure, I locked eyes with it, the face distinctly registering pain and loneliness before collapsing to the floor, the chains lashing him about, pummeling him to the ground, his head getting smashed and smashed and smashed to the deafening sound of When a Man Loves a Woman.»16

When the visitor changes his location and stares at the robot for «a few minutes» so that the robot can appear to be gazing at him, an act of what I am calling intimacy takes place. The visitor, by moving across the gallery space, gazing at the robot, and waiting patiently, as directed by the artist, makes it possible for the robot to establish a reciprocal gaze. The design of the technology predisposes the robot to it; but for the reciprocal gaze to take place on that occasion, the designed body needs to be brought into relation with the visitor’s doings, guided by the artist. The question to ask, then, is how that act of intersubjectivity is possible, and how the relationship with the other makes it possible in the first place. As the example from the gallery indicates, the robot gains its status as a self-standing creature that gazes in relation to what the artist (who was involved in the robot’s production) directs the visitor to do and what the visitor does. In other words, the reciprocal response between the visitor and the robot is relative to the semiotic work and other practical efforts that ground it in the situation of the encounter. Some of what renders Wolfson’s robot a gazing actor is accomplished before the interactional encounter in the gallery. But important features of it also concern circumstantial contingencies – what is shared in the intersubjective world of practice – during the interactional encounter: the visitor’s movement, gaze direction and patient waiting, and the artist’s guidance, for example. These doings allow the robot to be positioned as a figure that gazes back, its gaze appearing to be directed from within. Noticing and acknowledging these aspects of effort and interactional engagement brings forth the primacy of relation, where an individual exists as a unit only in respect to the encounter with the other.

The aim here, however, is to identify that relationality as a ground for possible acts of intersubjectivity (such as reciprocal gazing), and specifically to draw attention to the concrete, material and social elements involved in that relationality.

The encounter described in the ArtNews piece is unusual compared to the main framing narrative of Wolfson's sculpture, which leaves situated interactional maintenance largely invisible. The sculpture, as mentioned earlier, is about visitors’ gazes. The robot is engaged only as something to be looked at as a self-standing whole (an other to be objectified), then photographed and further visualized on the Internet. Similarly, the gaze of the robot, while involved with the visitors’ gazes, is fashioned as a gaze coming from another independent I, directed from within. In other words, both gazing poles are framed as autonomous units beyond any circumstantial contingencies of the encounter: there is one who looks at the other, who then returns the gaze. The relationship is, then, one of ‹distance› (rather than ‹intimacy›17), where the two poles are possibly mirrored (the robot is a mechanical challenge to human uniqueness), but not engaged beyond the distant gaze. In the sculpture, furthermore, this uncomfortable distance (where the human is challenged by a mechanical replica while kept at a distance from it) is heightened to the point of being turned into a spectacle of violence.

By considering events in the laboratory, on the other hand, I will attend to exactly what is absent from Wolfson’s sculpture yet makes its violence possible: the actual practical effort involved in the production of technology, as well as the step-by-step, multimodal and multisensory interactional moves that partake in the robot’s enactments of agency. I will trace how this kind of ‹intimacy› ranges from minute adjustments to designed features (like the doings of the gallery goer) to more substantive responses to glitches and unpredicted struggles in engagement with technology. With the latter, I will indicate how the robot is made a social-like actor through the involvement of those who interact with it in specific circumstances of everyday life. In that sense, I will trace how the robot’s sociality is enacted by being dispersed into the world.

Kate Fletcher’s Craft of Use: Post-Growth Fashion points out how clothes cannot be fully understood in terms of creation, material output, production and ownership.18 Like our clothes (and the rest of the mundane objects that constitute our everyday), new technologies need to be maintained through sustained attention. To capture this, we must consider the situation of which the technology is part. Being ‹engaging› is not simply a property of new media; these technologies are engaging (or not) in a situation.19 In drawing attention to the use and situatedness of social robots, I take a Wittgensteinian and ethnomethodological perspective.20

As I foreground the situation and use, I do not, however, imply that the design features of these technologies do not matter. Certainly they do; and I will indicate how the specificities of the robot’s design matter interactionally. The thrust of my argument, nevertheless, concerns the participation of that design in larger constellations of interactional moves that constitute specific situations of everyday life. Thus, rather than undermining the importance of what is designed into the robot, I suggest that the design of a technology intended for interaction cannot be fully captured without considering how this technology is interacted with.

This is not to prescribe how a social robot should be designed, nor am I arguing for the importance of iteration in the design process. Rather, I am saying that a social robot – its technical materiality and its discursive framing – cannot be realized without its enmeshment in situational contingencies. I experienced social robots existing as social robots only in respect to those acts of attention and maintenance. In other words, the social character of these technologies is fully reliant on their embeddedness in multimodal and sensory interactional enactments as part of specific circumstances of everyday practice. Therefore, I also do not propose any general definition of sociality or any criteria for identifying what it consists of; instead, I look at the sociality of a robot intended for interaction in specific situations of its engagement and as it is treated by those who participate in that engagement.

In the concluding section I will say more on the consequences that relationality has for the realization of the robot’s sociality. But to indicate how a social robot is actually realized as a social robot, I first invite the reader to join an interaction between a robot and a group of humans at a preschool. On that occasion, the preschool, as I will next explain, is part of a robotics laboratory (so, when I talk about the preschool, I also mean the laboratory). As the following section will describe, the robot there is sustained through the efforts of its designers as well as the preschool inhabitants – children and their teachers. To make these efforts available, however, we need to fine-tune our methods so that we can catch them. We need to sharpen our attention to situational specificities to trace the multimodal and sensory detail of interaction that characterizes the encounter. I thus consider how the interacting participants use talk, gesture, gaze, prosody, facial expressions, body orientation and spatial positioning as they engage the material aspects of the setting in which their action is lodged.21 In this sense, by ‹intimacy› I refer here both to the phenomenon of human-machine engagement and to the modes of attending to it.

At the Preschool / Laboratory

The moments of interaction that I discuss in this section were captured as part of my ethnographic study, conducted between 2005 and 2013, of a university machine-learning laboratory located in the Western United States. The project that I studied followed the principles of the so-called iterative design cycle method, where roboticists continually updated the current version of the robot by immersing it in a classroom at the nearby university preschool. The robot is called RUBI, which stands for Robot Using Bayesian Inference, and is a low-cost, child-sized robot designed to function indoors as educational technology. By following the robotics team, I often found myself at the preschool as well.22 There, in Classroom 1, a group of 12- to 24-month-old children, together with their teachers and the robot’s designers, engaged the robot.

The humanoid robot featured in this paper (see Figure 3) is equipped with a computer screen and two cameras that stand in for its eyes. The cameras are used to track people who interact with the robot, just as in Wolfson’s animatronics. When the robot is tracking human faces, its head moves. The robot also has a radio-frequency identification (RFID) reader implanted in its right hand to recognize objects handed to it. The interaction with the robot, however, is expected to revolve mainly around its touch-sensitive screen. When in ‹running› mode, the screen displays educational games or a real-time video of the robot’s surroundings, captured through its cameras. By displaying what the cameras record, the robot’s screen allows its interlocutors to see what the robot ‹sees›. Here we are concerned with what the robot sees/senses inasmuch as this ‹looking› and ‹sensing› is interactionally organized, playing a part in the enactment of the robot’s aliveness and sociality. Thus, I propose that the robot is an actor when it is treated as such in a specific interactional setting.

Figure 3: Robot RUBI, featured in the excerpt from interaction

Focusing on interaction is not to forget that the robot’s body bears the cultural modes of present-day signification inscribed in it.23 For example, when building the robot, the researchers’ goal was to construct an inexpensive, easily assembled machine that would quickly appeal to children. To do so, they built the robot’s body themselves: they assembled, mended, took apart and put together the pieces of the robot’s future body as they spent hours of work in what they call the «tinkering room.» The PI’s children and the preschool educators also got involved: while the PI’s six-year-old daughter considered the robot a girl, the educators named the robot «Mama RUBI.» In building the version of the robot discussed in this text, the practitioners’ goal was to design a sociable machine that can move around the room. Because this made the robot much larger and heavier, the researchers were worried that its bulkier size made it appear threatening. In producing the next model, they opted for a smaller design and gave up on locomotion for the time being. In this paper, as we focus on an excerpt from interaction that illustrates some of the complexities of the robot’s agency as enacted through acts of ‹intimacy›, we keep in mind that these inscriptions of gender, social role and age markers, woven into the robot’s body, condense cultural worlds as they inscribe historically specific imaginaries into the technology.24

Concerning this designed body, and as we focus on the lived moments at the preschool, we will first notice how the toddlers’ actions indicate the impact that a feature of the robot’s design – a movement – has on the experience of the robot’s agency. At the same time, the excerpt also indicates the situational grounding of that movement. The movement, embedded in a spatial organization of bodies and technologies and in the dynamics of multimodal semiotic interaction, participates in enacting the technological object as an actor. For the robot to ‹come alive› (and finally become the kind of actor the participants may engage as an interlocutor), its designed body is not enough. The robot also needs the acts of attention performed by humans and the involvement of other technologies implicated in that particular moment of interaction, in that specific setting.

The excerpt we will focus on comes from the roboticists’ third visit to the preschool. The toddlers had already become familiar with the robot during the two previous visits. During the third visit, the robot is accompanied by the laboratory’s director, the principal investigator (PI), two graduate students (GS1 and GS2), and the ethnographer (Et), who is the author of this text. As the team enters the preschool, the ethnographer turns on her camcorder, aiming to capture the complex web of gazes and gestures that articulate, and are articulated by, the activities in Classroom 1.

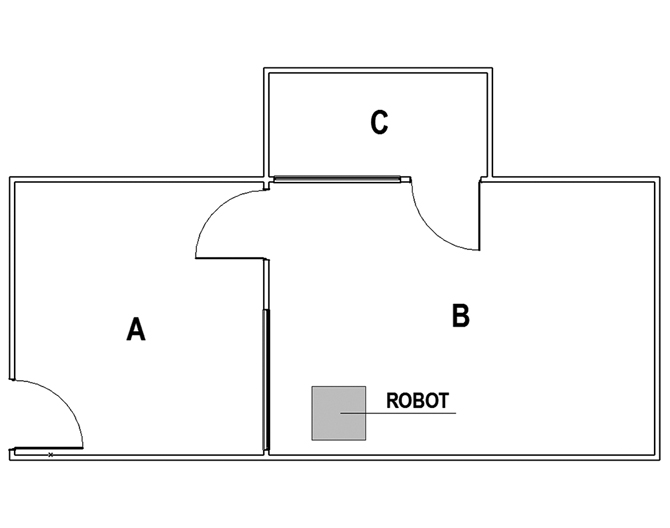

Classroom 1 is a space for two-year-olds to play and learn while their parents are not present. Yet, because the preschool is part of the university, Classroom 1 is also predisposed as a research space. As depicted in Figure 4, the classroom is divided into three areas, where the two main areas – Area A and Area B – are connected by a door and a big window. The window allows for direct monitoring between the two spaces. In addition, there is a small room – Area C – which has a one-way screen opening into Area B. This multifunctional space – both a classroom and an experimental space – is further organized as a setting for research sessions.25 The presence and arrangement of multiple pieces of technology (e.g., the ethnographer’s video camera, the roboticists’ computers and the robot itself), their mingling with the objects that exist in Classroom 1, and the interactional engagements between the research team and the preschool inhabitants articulate the space as a laboratory.26 In that sense, I talk about the preschool as a part of the extended laboratory.

Figure 4: The layout of Classroom 1

Following the team’s arrival at the preschool, the roboticists engage in a ritual preparation of Area B for a research session. The PI asks one of the educators to keep the children busy elsewhere so that they will join the activity only once the scene has been set up. This organizing of access to the robot’s proximity (so that the robot can be engaged only during certain segments and moments of the visit) is already an example of those acts of maintenance that participate in performing the robot in a certain manner. In that moment, the robot is what it is in respect to the reinforcement of the line that divides Area A from Area B.

To further stage the scene for the session, first the robot’s computers have to be turned on and connected to an external laptop; then the room’s furniture needs to be appropriately arranged. Since the robot is not expected to perform any locomotion during the session, the PI places the robot in a corner of Area B, just in front of Area C. As he plugs the robot’s computer into the wall socket, the PI arranges large, colorful cushions around the robot to block any view of the wires, while allowing the children to be comfortably positioned in front of the robot. The labor involved in further arranging these barriers to perception, so that the scene can be prepared as having a «backstage» and a «frontstage»,27 organizes the classroom so that certain kinds of actions, through which the robot can be sustained as an agent, can take place. For example, it is almost impossible to stand behind the robot to observe its computers and wires (which would suggest that the robot is a piece of technology rather than a social actor). Because these elements of the setup may «discredit the impression» of being in the presence of a live social actor, they are «suppressed».28 When the toddlers enter the room, they find a familiar environment, namely the playroom that has grown into a research setting. There, they are expected to engage with the researchers and the robot as interlocutors. The organization of the cushions, the clearance in front of the robot, and the positioning of other actors who also face the robot not only make the children notice the robot but also direct them to its face, hands, and computer screen. We will see how this front region, where the robot is enacted as an interlocutor, is also managed through the use of gesture, talk, gaze, touch, expressions of emotion and body orientation on the part of the roboticists and the other inhabitants of the preschool.

Concurrently, some of the members of the robotics team, together with the computers and wires, serve as back regions for the performance of the robot’s agency. One of them is GS1, a graduate student who, while her adviser (the PI) organizes the space around the robot, positions herself in Area C. From there she can observe the events around the robot through a window that looks like a mirror from the other side. She uses this location to remain invisible to the toddlers’ gaze while directing the robot’s head movements and vocalizations from a laptop. Robotics practitioners consider GS1’s work, carefully orchestrated in response to the children’s conduct, to be a methodological tool for developing autonomous robots. Even though practitioners regard the operator’s work as necessary only until the robot gains the capacity to act autonomously,29 the operator’s actions are also significant because, while indicating the knowledge expected to be embedded in the robot’s design, they reveal another layer of support for the robot’s functioning.

As the team goes through the preparation routine, it encounters a problem: the robot will not run the programs designed for the research sessions, and the robot’s operator has to reboot her laptop a couple of times. To diagnose the issue and coordinate the entire setup, the principal investigator moves swiftly between the operator’s computer (in Area C) and the robot’s body (in Area B) while the ethnographer continues to videotape the scene. Her video captures traces of further labor directed toward the robot’s functioning. As the roboticists struggle to start the robot’s program, the toddlers, in particular Perry (P) and Tansy (T), are visibly intrigued by the event. Even though one of the team’s members, GS2, tries to prevent the toddlers from entering, they do not give up. Finally, they enter Area B. As the activity around the robot increases, and the adults have to manage the robot’s appearance while dealing with the inopportune presence of the toddlers, it becomes clearer that the transformation of Area B is not under the absolute control of the adults. The toddlers’ efforts to cross that boundary are also part of how the robot is articulated in that particular moment.

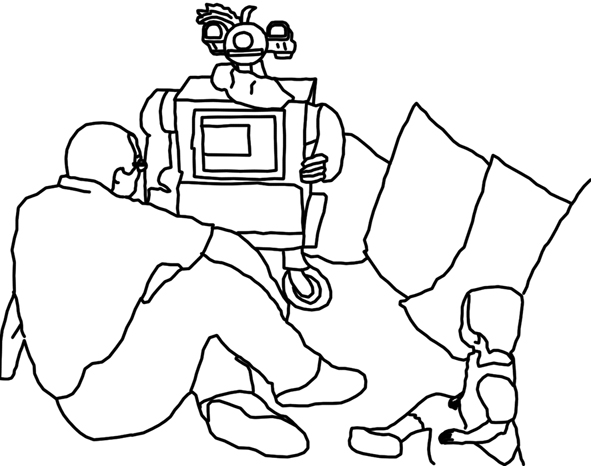

At the beginning of the excerpt, the principal investigator closely monitors the robot’s screen. As he turns around, he notices Tansy seated on the floor in front of the robot. By initiating a conversation with the toddler, the principal investigator accepts the arrangement of bodies and technology suggested by Tansy’s presence (Figure 5).30 He seats himself on the floor next to her (as is usually done during the research sessions at the preschool), allowing this transformation of the scene to change the mode in which he engages the robot. He no longer treats the robot only as a nonfunctioning thing, but also as an actor. At the same time, the PI and his team continue to prepare the robot for its ‹proper› functioning. In what follows, we will see how this ad hoc management of the front- and backstage regions is accomplished.

Figure 5: The principal investigator, the robot and Tansy (from left, clockwise)

As we focus on the excerpt from the interaction, we join the PI, the toddlers, and the rest of the team as they witness the moment in which the robot starts to function as the robotics team expects. In describing the scene, we will pay specific attention to how the robot achieves its agency as its movements are grounded in the preschool interaction. We will first notice how this situational grounding makes the robot’s movements publicly available as relevant, so that they can then be recognized as meaningful events to be acted upon. Even though the toddlers do not yet exhibit full linguistic mastery, their interactional capacities are remarkable. Their vocalizations, gestures and facial expressions indicate their co-participation in the robot’s enactment as an actor.

Each line of the transcript (numbered 1, 2, 3, …) is divided into the contributions of the participants, human and non-human: PI (Principal Investigator), R (Robot), and the three toddlers, T (Tansy), P (Perry), and J (Joy). Each participant’s contribution is further divided into a line of talk, which follows the participant’s name; a line of gaze (g); and a line of hand gesture, where «rh» stands for «right hand» and «lh» stands for «left hand».

The line of talk is transcribed following Jefferson’s conventions:31

= Equal signs indicate no interval between the end of a prior and start of a next piece of talk.

(0.0) Numbers in parentheses indicate elapsed time in tenths of seconds.

(.) A dot in parentheses indicates a brief interval within or between utterances.

::: Colons indicate that the prior syllable is prolonged. The longer the colon row, the longer the prolongation.

- A dash indicates the sharp cut-off of the prior word or sound.

(guess) Parentheses indicate that the transcriber is not sure about the words contained therein.

(( )) Double parentheses contain the transcriber’s descriptions.

.,? Punctuation markers are used to indicate ‹the usual› intonation.

To transcribe the dynamics of the gaze (the second line), we adopted transcription conventions from Hindmarsh & Heath:32

PI, R, T, P, Te Initials stand for the target of the gaze.

_________ Continuous line indicates the continuity of the gaze direction.

The transcription conventions in the third line are used to depict the hand gesture, and are adopted from Schegloff, and Hindmarsh & Heath:33

p indicates point.

o indicates the onset of a movement that ends up as a gesture.

a indicates acme of gesture, or point of maximum extension.

r indicates the beginning of retraction of the limb involved in the gesture.

hm indicates that the limb involved in the gesture reaches ‘home position’, the position from which it departed for the gesture.

…. Dots indicate extension in time of previously marked action.

,,, Commas indicate that the gesture is moving toward its potential target.

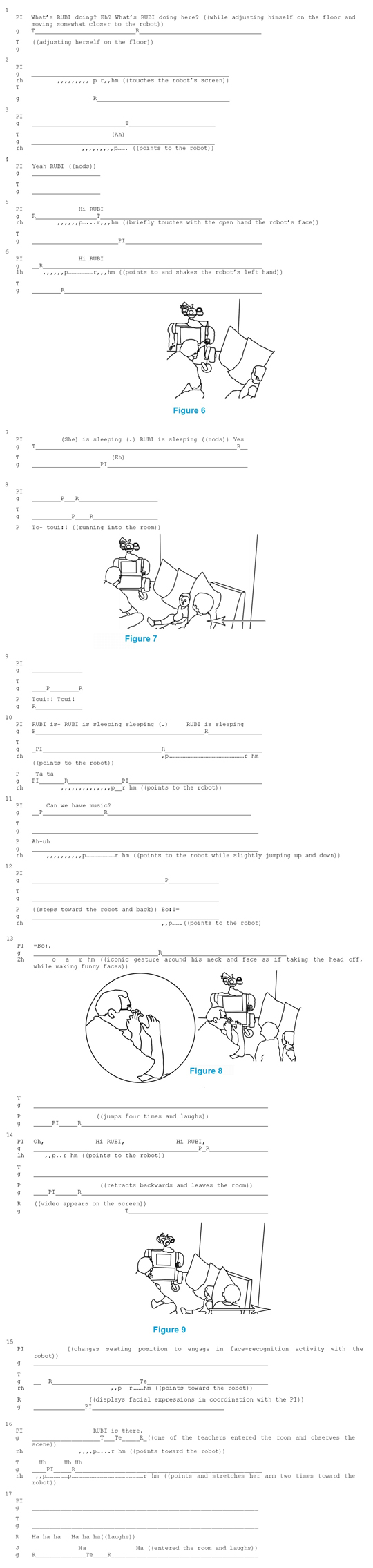

To engage the excerpt, let’s start by considering the movement of the robot in Line 14, as this can be seen as a moment that divides the excerpt into a before and an after the robot ‹comes alive›. There, the robot’s head tilts down and then pans across the room. At the same time, its computer starts to display a real-time video of its surroundings. The movement of a robotic machine is customarily considered a sign of life: it is a way for the thing to manifest its aliveness. When Errol Morris, in his film Fast, Cheap & Out of Control, introduces Rodney Brooks,34 well known for his «situated mobile robots»,35 Brooks narrates his autobiographical fascination with computational technologies by anchoring it in an account of machinic movement:

«I sometimes ask myself why do I do this. And I trace it back to my childhood days. I used to try to build electronic things […] and I was always trying to build computers […] I just had this tremendous feeling of satisfaction when I switched the thing on, the lights splashed and machine came to life. When I was at MIT building these robots, there was even a more dramatic moment. One night, the physical robot actually moved! I mean, it was one I was working on for days, but it completely surprised me! It moved!»

When Brooks utters «machine came to life» and «it moved», the film frames his face: his eyes bulge out as he smiles mischievously.

In our excerpt from the interaction, just after the onset of the robot’s movement, and as illustrated in Figure 9, Perry swiftly but cautiously withdraws. Aiming for the door, Perry keeps a close eye on the robot as she moves backwards. She then leaves the room in a hurry. Tansy’s conduct in lines 14-15 is similar: when the robot turns its head toward her, Tansy looks down (line 15). Once she drops her gaze and the robot moves its head away, Tansy looks back at it. Somewhat analogous is Joy’s reaction in line 17: when the robot starts to emit laughing sounds, Joy laughs back. This comportment on the part of the toddlers tells us that the robot’s physical features are undeniably important for its effects on those around it. The excerpt shows how the timing and morphology of the robot’s movement shape reactions to its presence. As soon as the robot moves, Perry leaves the room while Tansy initiates a ‹looking› action.

But is this all? In other words, can we account for aliveness and the social character of the machine entirely in terms of its physical body and reactions to its movements? In what follows, I will describe how the children’s actions – their subtle gestures, movements across the room, facial expressions, and their not-yet-linguistic vocalizations – materialize the robot’s attraction. But I will also indicate how they take part in a scene where that movement is collectively oriented to, rendered relevant, and followed by an act of greeting. In other words, I will show how the witnessing of the movement, as a sign of life and as an act that warrants a social response of greeting, is organized through concerted action and interaction in the preschool classroom. In this sense, Perry’s, Tansy’s and Joy’s actions are not only ‹reactions› to the robot’s movement; they are also involved in its situated interactional maintenance.

The linkage between the robot’s movement and the idea of life connects the preschool robot to a series of historical predecessors, of which modern Western automatons, such as Vaucanson’s defecating duck,36 are exemplary. In her discussion of animation, Vivian Sobchack points out that the word ‹automaton›:

«[…] was first used in the 1600s to mean both ‹something which has the power of spontaneous motion or self-movement›, and a ‹mechanism having its motive power so concealed that is [it] appears to move spontaneously›; ‹a machine that has within itself the power of motion under conditions fixed for it, but not by it›. As with animation, then, the concept and motive power of the automaton, from the first, turn in on and reverses its meaning – movement the linchpin, but spontaneous agency (or anima) the true sign of ‹life›. Indeed, the initial entry in the OED [Oxford English Dictionary] for ‹automatic›, inaugurally used in the early 1800s, reads: ‹Self-acting, having the power of motion or action within itself›; this again conflating movement and anima through use of the word ‹self›.»37

Sobchack explains that in animation movement is seen as indicating a self behind it. While ‹automaton› was originally also understood as a machine whose movement only ‹appears› to be propelled ‹spontaneously›, that valence seems to have less force in contemporary animation, with its fixation on «spontaneous agency» as «the true sign of ‹life›».

In social robotics, the ideas concerning ‹movement› and ‹self› are still at play, and the notion of impressions of agency is not foreign to these discourses either. For roboticists, movement has an undeniable value. Rather than only designing software programs as models of the human mind, they design robots. In doing so, roboticists not only align their enterprise with the broader argument for embodiment38 in cognitive science,39 but, as in our case, generate possibilities for that body to be perceived in a certain way. Modeling the agent’s body as a body that moves means allowing it to generate signs of life. The movements of the robot’s body are to be seen as coming from within, indicating the robot’s autonomy. This, however, gets even more complicated, as what distinguishes the kind of robot encountered at the preschool is its ‹social› character. The preschool robot is designed to be a «socially intelligent robot», and, according to the roboticist Cynthia Breazeal,40 the sociality of such robots is to be linked to their intentional states. In other words, social robots, unlike other robots, are not only to be seen as (alive and) autonomous but also as intentional. When Breazeal discusses «ingredients of sociable robots», she explains that the robots need to have a «life-like quality».41 This quality is importantly grounded in the robots’ movements, which humans interpret as self-propelled, seeing the moving agents as autonomous. Breazeal explains that «life-like human style sociability» is achieved via the human tendency to anthropomorphize those movements.42 By this she means that humans interpret moving behavior as governed by internal intentional states, such as beliefs, desires, intents, goals, feelings, etc.

But even if we are talking only about impressions of agency, the discussion rests on two points worth noticing. First, when social roboticists want to elicit the human tendency to interpret a moving robot as moving intentionally, they turn to the design of the robot’s physical features. The question for them is how to make a robot’s body appear to have beliefs and desires governing its movements. Second, when they talk about those interpretations, they presume them to reside in the interiority of a single individual: each of us, in isolation and outside of any activity, would perceive the robot’s movements not only as indicating life but as being intentional.

The events at the preschool, however, push this phenomenon out of the interiority of both the robot and its human affiliates. Grounded in acts of intimacy, the intentional body of the machine is done as a multiparty achievement instantiated in the world. In other words, the movements through which inert matter comes to life (understood also as social life) concern the robotics team’s visit to the preschool, not only the design of the robot’s body.43 In fact, the more the robot is dispersed into the world, the more it acquires its own agency. Through the doings of the research team, the arrangement of multiple pieces of technology, the spatial organization of Area B, and the subtle semiotic acts of the preschool’s inhabitants engaging the robot’s body, the robot sleeps and says «hi». Thus, as the children respond to the movements of the robot’s body and further see it as sleeping and greeting, that response is not only about the previously designed body, or about how each participant interprets it through some kind of isolated abstraction, but also about its interactional maintenance in a specific, multiparty situation of everyday practice.

This is, of course, not to imply that chains of careful decisions and ad hoc design solutions, performed and inscribed in the robot’s body before that specific encounter at the preschool, are not highly relevant. In the quote from Errol Morris’ film, Brooks says that the robot that moved was the one he had been «working on for days».44 He prefaces the account by locating the effort during «one night», presumably signaling that the undertaking required extra effort. Brooks then proceeds to describe the robot’s movement, positioning it in apparent opposition to that work: «It had that magical sort of thing, it worked. And the best part was that it completely surprised me. I’ve forgotten that this physical thing was what I was trying to get to work, and then it happened.» As he explains his surprise, Brooks expresses delight («the best part») that he managed to forget his work on the robot. For the robot and its movement to be surprising, and to have «that magical sort of thing», the work on the robot needs to be «forgotten». So we have, on the one hand, Brooks’ highlighting of the human work and effort put into «this physical thing»: this is, first of all, a man-made machine. On the other hand, he proposes that to be magical and surprising, the machine needs to be perceived as detached from that work so that it can be seen as self-standing and autonomous. My account of the robot’s movement at the preschool, by contrast, maintains that it matters that this work, and the efforts that accompany the machine and its movements, not be forgotten. Furthermore, it suggests that the human work and the machine’s agency should be tackled as codependent.

Rather than seeing human engagement and the robot’s agential features as two opposing facets, what interests me are the linkages between the two as enacted in the situation of interaction.45 In other words, my aim is to describe how the robot’s movements become what they are by being embedded in the situated encounter in the preschool classroom.

And that encounter also includes the work performed during the visit, not only before it. The movements of the robot, for example, directly index the work of the graduate students located behind the mirror in Area C.46 When the robot finally moves, its movements indicate that the graduate students have managed to start the robot’s program by operating it remotely through their laptop. But work also takes place in Area B, adjacent to the robot’s body. This can be noticed in Line 11, when the PI says «Can we have music?». With that utterance, the PI not only responds to the presence and conduct of the two toddlers next to him but also guides the graduate students in their work, as he asks them to run an educational game (one that plays the children’s song Wheels on the Bus).

As the example of «Can we have music?» already suggests, the effects of the robot’s agency do not only emerge from its movements, humanoid morphology, inscribed cultural markers, and the work of the robotics team; they are also relative to the situated semiotic actions in Area B. To be considered ‹alive›, and even ‹social›, the robot’s movements have to be made publicly available as relevant: the robot’s potential interlocutors need to be able to recognize the robot’s movements as something that they should pay attention to, already know, can attribute meaning to, and, finally, should act upon. As the excerpt indicates, a crucial component of this process is accomplished through the PI’s interactional work. We first see how the PI selects and frames the robot’s features as not only noticeable but particularly significant. He then categorizes the robot’s behaviors, or lack thereof, in terms of human action. Finally, the PI formulates the toddlers’ actions as performances of the robot’s social character. He thus participates in enacting the movements as movements of a social actor. Those movements are then projected to be acted upon, and are acted upon, in the manner in which a social actor is normatively expected to be engaged: through a greeting ritual.

Perry’s withdrawal from Area B (line 14, Figure 9) is preceded by the PI’s high-pitched «Oh», uttered as the robot starts to move and its screen switches to video. The PI’s utterance marks the change in the robot’s state as something to be noticed,47 drawing the group’s attention to it. In contrast, when the PI notices changes in the appearance of the Unix shells on the robot’s computer screen, he frequently touches the screen but does not produce any semiotic action that would make such changes more evident. In other words, he treats these kinds of changes as events pertinent only to the practical actions of the team, semiotically irrelevant and thus not of interest to the toddlers.

The PI’s semiotic moves also code the robot as a living,48 human being. In lines 7 and 10, for example, when commenting that the robot is asleep, the PI attributes aliveness to the machine. In a similar vein, in lines 1, 4, 5, 6, and 10, the researcher refers to the technological object by calling it «RUBI», while in lines 5 and 6 he indicates that the object needs to be greeted, as he touches its face and hand. Aug Nishizaka has studied the involvement of touch in interaction, describing how a tactile reference to specific locations is shaped by the action sequences in which it is contingently embedded.49 In line 5, the PI directs Tansy’s attention toward the robot’s face by accomplishing the reference through touch. The touched face is interactionally framed not only as a place to be visually oriented to but, in combination with the linguistically expressed greeting, as a focal point of interaction. In fact, in line 6 the PI follows up by enacting the greeting procedure, uttering «Hi RUBI» while shaking the robot’s hand. This enactment of the greeting ritual, which models the appropriate and expected way of acting and interacting with the machine, constitutes the addressee as a particular kind of entity: a social actor. By greeting the robot, the PI projects an expected next action, namely a return greeting, performed by a social actor. In this sense, the robot is an actor not only because of the features intrinsic to its body but also because of its interactional maintenance through the PI’s semiotic actions during the research session.

The achievement of the robot’s social character also involves the participation of the toddlers.50 Toddlers participate through their own actions, but we can also read their involvement in the PI’s conduct. When the PI talks about and treats the robot as a living, social actor, he responds to the toddlers’ presence in Area B and to the specific actions that they initiate. When the PI says that the robot is asleep, this is not because the robot’s eyes are closed, or because it is lying in bed. One cannot even say that the robot is asleep because its body fails to exhibit any activity: during the interaction, the robot’s computer screen is in fact turned on, displaying various Unix shells with flickering commands. Rather, what puts the robot in a sleeping state is its inappropriate functioning with respect to the presence of the toddlers in the room. The robot is not yet set up to sing, run educational games and track faces, as expected when approached by the toddlers. The practitioners, however, cannot just treat it as any other piece of technology. The ‹sleeping› explanation is an answer to the complexity of the situation to which they are responding. With children too young to understand what death is, ‹sleeping› is often used as a comforting euphemism when they express curiosity about a dead creature. The PI appears to rely on this culturally available organization of experience, using the sleeping explanation to sustain the animated frame around the robot.51 In presenting the robot as sleeping (rather than non-functioning), the PI also engages in «face-work».52 He «gives face» to the robot as he arranges for it «to take a better line than he might otherwise have been able to take».53 In doing so, the PI not only preserves the face of the robot but also maintains his own face and that of the rest of his team.54 With a piece of technology that is alive, the practitioners position themselves as roboticists.55

Immersed in the scene, the toddlers initiate acts of shared attention toward the robot. They cheerfully articulate proto-words and direct deictic gestures toward the robot while monitoring how the PI reacts to their moves. In doing so, they can shape the PI’s actions. In line 3, Tansy points while saying «Ah.» In line 10, both Tansy and Perry point while Perry utters «Ta ta.» In line 11, Perry points again while uttering «Ah-uh.» In lines 15 and 16, Tansy enacts a series of indexical gestures while saying «Uh uh uh» (line 16). As the toddlers manifest their excitement toward the robot, the PI readily responds to their actions. Mardi Kidwell and Don Zimmerman emphasized that acts of shared attention do not only involve drawing and sustaining another’s attention toward an object,56 as suggested by psychologists.57 When embedded in social settings, those acts also indicate what another should do in response. A child who shows an object to an adult projects a social action that s/he expects the recipient of the show to accomplish. When Perry and Tansy look at the PI while vocalizing and pointing, the PI treats their actions as ‹shows› to which he diligently responds by identifying and appreciating what they are showing. When Tansy utters «Ah» in line 3, the PI responds (line 4) with «Yes RUBI.» When she says «Eh» in line 7, the PI readily answers with «RUBI is sleeping yes» (line 7). As in lines 4 and 7, Tansy’s «Uh uh uh» (line 16) is immediately followed by the PI’s «RUBI is there.» And when Perry utters «To-toui:!» in line 8, and «Toui:! Toui!» in line 9, the PI repeats «RUBI is- RUBI is sleeping sleeping (.) RUBI is sleeping» (line 10). When Perry says «Ah-uh» (line 11), the PI cheerfully follows with «Can we have music?» (line 11).

By responding to what the toddlers are drawing attention to,58 the PI configures the toddlers’ actions in terms of the robot’s agency. He does not simply orient to the robot as he would to any other object, but makes the toddlers’ actions intelligible by framing them in terms of the robot’s social character. Through the PI’s utterances, the toddlers actively participate in the scene. Their preverbal expressions assume verbal forms, so that their actions can be read as addressing the robot as «RUBI», greeting it, asking for music, and talking about the robot’s sleeping state. In other words, through coordination with the PI’s actions, the toddlers engage the robot, inscribing it with the traits of an animate social actor. Notice also that the PI’s expressions of appreciation often take the form of affirmation. By saying «Yes RUBI» or «RUBI is sleeping yes», the PI assigns the content «RUBI» or «RUBI is sleeping» to the toddlers’ «Ah» and «Eh», respectively.

A further example of how the PI elaborates the toddlers’ utterances, and thus ensures that they participate in configuring the robot as an actor, is his contribution in line 13. After Perry utters «Bo» in line 12, the PI follows with another «Bo:» in the next line. Instead of attributing an already existing linguistic form to the toddler’s pre-linguistic utterance, the PI adopts the toddler’s idiosyncratic expression to talk about the robot. Through this uptake, the PI talks through Perry’s words. As soon as he repeats Perry’s «Bo:», the PI follows his utterance with a gesture that looks like an act of taking off the head (see Figure 8). Because the PI’s «Bo:» voices Perry’s expression, the PI’s semiotic action further elaborates Perry’s utterance, allowing it to take up the content of the gesture. Through the PI’s gesture, Perry’s «Bo:» is performed as an expression that indicates the robot’s inappropriate functioning.

This framing could be understood as the PI’s manipulation of whatever the toddlers do, making it appear as part of a coherent series of expressions that implicate an animate «other».59 This kind of interpretation, however, would miss some of the essential features of the interaction, as discussed in Alač, Movellan & Tanaka,60 and would disregard how those features are part of the ongoing scene in Area B. In this situation, the practitioners, having found out that the toddlers have joined what was originally conceived as a working space, are trying to explain the robot’s lack of proper functioning in terms of its being asleep, while the toddlers keep pointing toward what they recognize as important (based on their previous encounters with the team). Here, the PI translates the toddlers’ proto-words and shapes his utterances to theirs, so that his semiotic acts fit the common course of action. Perry’s «Bo» and the PI’s further elaboration of the utterance are performed as legitimate moves in the language game61 that configures the robot as a living, social actor. The functioning of this language game relies on the robot’s bodily movements. However, while the agential effects of those movements are relevant, they are so only by being intimately maintained as part of the larger, continuously updated, step-by-step articulation of a historically shaped interactional situation. This situation, then, concerns not only the relationship between the robot and a single user or designer, but the presence of all those who partake in the occasion of the encounter. When the PI addresses and talks about the robot, he does so by shaping his words with respect to the presence of the toddlers, by responding to their utterances, and by adopting their expressions.

Conclusion

By paying attention to ‹intimacy› in the human-technology encounter, the interaction with the preschool robot brings two issues to the fore: the problem of labor and the question of agency. Popular discourses on robotic technologies are often organized around a scenario in which robots displace humans en masse. Since here we deal specifically with social robots, and approach them from the vantage point of their actual engagement, the landscape of trouble shifts. While social robots are designed to perform work traditionally done by humans (in our example, singing with children and keeping them entertained), the preschool interaction does not indicate a decrease in the quantity of labor and effort involved. My description mostly centered on the work of the roboticists, and teachers were mentioned only tangentially, as helping them get things done (specifically, keeping children away from the robot until it was properly set up). But it is not difficult to imagine that most of the work performed here by the roboticists would need to be done by teachers once social robots move into their classrooms. To be sure, this work is not only about skills in designing the technology or supporting its proper functioning, but also concerns conversational routines and the multimodal and sensory aspects of interaction that a robot aimed at social engagement renders indispensable. What we see, then, is labor performed in ways that do not map onto how roles are traditionally partitioned and how work in those settings is usually accounted for. The danger is that this labor may become progressively invisible. As these shifts concern not ‹otherworldly› or ‹futuristic› efforts but prosaic ones that are also ‹intimate›, the question is how these forms of commitment can be kept perceptible so that their importance and extent do not get forgotten.

And then there is the question of agency. In their call for papers, the editors of this special issue talk about new media to say that «[i]ntimacy marks […] the various processes of self-configuration that relate to an increasingly fragile model of identity.» As I have considered intimacy in terms of the situatedness of the encounter, where the robot is accomplished through the interactional engagement and maintenance of those around it, the agency of the robot that emerges does not necessarily match how agency is commonly rationalized in the West. That the preschool robot was realized as part of circumstantial experiences questions the idea that self and agency need to be grounded in the interiority of an individual. When the editors talk about effects of intimacy on self-configuration and «the fragile model of identity», they may not intend to express a positive stance toward the phenomenon they indicate. One could, for example, envision the stereotypical scene where robots inhabit futuristic and sterile spaces in which everyone, humans included, looks ‹robot-like›. The story then goes that, as we live with robots, we turn into ‹cold›, ‹cool› and ‹distant› beings. Actual laboratories, however, are neither «so swish, so smooth» (as imagined in the opening Eddie Izzard quote) nor distant and cool. Instead, there are glitches, effort and engagement. That engagement questions the traditionally conceived boundaries between the self and the world. In this paper, I have treated the reconfiguration of agency as something that, rather than disengaging us, opens us to the world.62

Recently, roboticists have promoted the idea of robots as co-operators and partners, designing «weaker robot entities».63 For example, in 2014, the US National Science Foundation’s National Robotics Initiative called for projects on «The realization of co-robots acting in direct support of individuals and groups», where the robots would be designed as «working in symbiotic relationship with human partners».64 In the domain of social robotics, Fumihide Tanaka, for example, has worked on «care-receiving robots».65 Intended for use in educational settings, the project promotes the pedagogical paradigm of «teaching-for-learning», in which robots receive instruction or care from children. By looking at the problem of agency from the perspective of everyday situated interaction, this paper, however, argues for something different. While in the scenario of the robot as a «partner» or an «entity to be cared for» the machine is still conceived as an agent in itself, prior to any engagement with it, what I have observed is the achievement of agency through interaction, where the agency of the robot is spread across the net. This net does not only concern human-robot couplings, but specific situations that involve all those who are present at the scene. The maintenance I have described, furthermore, is not done by design, but spontaneously and inevitably.

And this understanding of agency holds not only for robots but for their human interlocutors as well. In the laboratory, acts of support and tending blur the lines between the robot and those who participate in the situations of its enactment. This means that, as the technology is supported through human action and interaction, humans are also figured through those encounters. In other words, these engagements, which further question the boundary between the biological and the synthetic, allow us to witness the dynamic nature of human roles.

How does this relate to the violence brought up in the introductory section? In other words, how does interactionally distributed agency speak to the frustrations, refusals to engage and violent responses seen in my class’s assignment and in Furby’s appearances on YouTube, further spectacularized at David Zwirner Gallery? I did not participate in those human-machine encounters, but what was transcribed, reported and captured in photographs and YouTube videos suggests that two ideas are at play: human exceptionalism (how do these machines dare to question it?) and the deep-seated notion of agency as pertaining to the interiority of a single individual. When my students had to converse with a chatterbot, they positioned it as an agent in itself, presuming its complete autonomy and self-sufficiency. But, divorced from the rest of its interactional support, the bot disappointed. It was then scolded, abused, and tortured.

In the laboratory, on the other hand, the PI knew that the robot was not sleeping and that it was not greeting the children. Nevertheless, in that situation, he had to enact it as such. This engagement was not simply about his beliefs or his willingness to support the machine, but about the dynamics of the situation in that multi-party setting. The PI responded to what took place around him: while trying to make the robot work, he discovered that one of the toddlers had made her way into the room and taken a seat next to him. Whatever rationalized beliefs the PI may hold about it, in that situation the agency of the robot (and that of the PI) cannot be fully explained in terms of individual interiority. As the encounter at the preschool unfolded, it made sense for the PI to participate in and constantly reenact the robot as an interlocutor; and there really was no way around it.

What about Wolfson’s case? Wolfson’s work is interesting because it thematizes the violence seen in my classroom and on YouTube. Judging from what I experienced in the robotics laboratory, it is plausible that in making his sculptures and collaborating with engineers and technicians «at a Hollywood animatronics lab that specializes in creature effects and building out robotics models for movies»,66 the artist was involved in providing support to and attentively engaging with his humanoids. From the ArtNews article, we also learn that he asked a visitor to do the same when he directed the critic to a certain spot in the gallery from which the robot could appear to lock its gaze with him. At the same time, however, in his animatronic installations Wolfson, while positioning his sculptures as self-standing agents, spectacularizes the violent impulses and disturbed responses people have to humanoid technologies. This time, gallery-goers witness the violence rather than directly participating in the abuse.

We tend to be intrigued by new technologies that are getting «so swish, so smooth», promising seamless interactions that would render them invisible. We also seem to derive pleasure from seeing these human-like machines being abused.67 Yet the mundane face of the phenomenon, that of situated support, is also there. In this text, I have turned to it and, while providing an example of how we could get at acts of accommodation through «intimate methods», traced the enactment of agency through its dispersion into the situational details of everyday practice.

In an article for artnet News, Ben Davis,68 who defines Wolfson’s stance as «post-meaning, post-critical, non-judgmental» and compares his work to Jeff Koons’s, quotes Wolfson as saying:

«‹The intention is a positive one – all the works have very positive intentions behind them›, Wolfson says. ‹They're not about hatred and they don't propagate hatred. I think they're about witnessing culture. It's like, I consider myself a witness to culture and not a judge.›»69

Our present cultural moment may (or may not) be about hatred and violence, as epitomized in our relationship with social robots or similar humanoid technologies. By taking up the frame of intimacy, my intention here was not to propose kindness toward these objects or to generate a solution for the design of robots better suited to social interaction. Instead, what I described was intended to get at the idea of self and agency that does epitomize our current cultural moment. The bursts of violent behavior toward humanoids are nothing but a testament to it.

Acknowledgments: I would like to thank Sarah Klein, Maurizio Marchetti, Dori Morini, Javier Movellan, the participants in the ethnographic study, and the editors of the special issue of the Zeitschrift für Medienwissenschaft for their contribution to this study.

- 1 The recording can be retrieved on YouTube, e.g. «Eddie Izzard’s Encore on Computers», www.youtube.com/watch?v=k6C_HjWr3Nk&list=PL27B8C3609EDE59BB, last viewed 4 June 2016.

- 2 E.g. Cynthia Breazeal: Designing Sociable Robots, Cambridge, Mass. 2002.

- 3 E.g. Michael Lynch: Art and Artifact in Laboratory Science. A Study of Shop Work and Shop Talk in a Research Laboratory, London 1985.

- 4 Bruno Latour: Science in Action. How to Follow Scientists and Engineers through Society, Cambridge, Mass. 1988.

- 5 E.g. Bruno Latour: Where Are the Missing Masses? The Sociology of a Few Mundane Artifacts, in: Wiebe Bijker, John Law (eds.): Shaping Technology/Building Society. Studies in Sociotechnical Change, Cambridge, Mass. 1992, 225–258.

- 6 Natalie Jeremijenko: If Things Can Talk, What Do They Say? If We Can Talk To Things, What Do We Say?, last updated 5 March 2005, www.electronicbookreview.com/thread/firstperson/voicechip, last viewed 17 September 2016.

- 7 An article on Phaidon’s website states: «It can be tricky picking out the masterpieces in contemporary art, yet Jordan Wolfson’s […] animatronic work […] is surely one of those in contention.» Unknown author: Have You Seen Jordan Wolfson’s New Animatronic?, last updated 28 April 2016, de.phaidon.com/agenda/art/articles/2016/april/28/have-you-seen-jordan-wolfsons-new-animatronic/, last viewed 17 September 2016.

- 8 Nate Freeman: The Man-Machine: Jordan Wolfson on his Giant New Robot, Hung by Chains at Zwirner, last updated 9 May 2016, www.artnews.com/2016/05/09/the-man-machine-jordan-wolfson-on-his-giant-new-robot-hung-by-chains-at-zwirner/, last viewed 17 September 2016.

- 9 Documentation of the Jordan Wolfson exhibition at David Zwirner Gallery can be found at www.davidzwirner.com/exhibition/jordan-wolfson-5/, last viewed 4 June 2016.

- 10 Andrew Goldstein: Jordan Wolfson on Transforming the «Pollution of Pop Culture Into Art», last updated 10 April 2014, www.artspace.com/magazine/interviews_features/qa/jordan_wolfson_interview-52204, last viewed 17 September 2016.

- 11 Nate Freeman: The Man-Machine.

- 12 Ibid.

- 13 On one occasion, I observed a researcher staging violence toward a robot to show me that, unlike the visitors who had just toured the laboratory and marveled at the robot, he was not emotionally attached to it. In this regard, it is also interesting that in the interview with Wolfson, the comment about the violence of his piece reported above is followed by: «‹It was about me as an artist relating to the object›, Wolfson added. ‹It’s hard for me to feel anything because I made it.›», ibid.

- 14 Vivian Sobchack: Animation and automation, or, the incredible effortfulness of being, in: Screen, Vol. 50, No. 4, 2009, 375–391.

- 15 Lucy Suchman: Subject Objects, in: Feminist Theory, Vol. 12, No. 2, 2011, 119.

- 16 Nate Freeman: The Man-Machine.

- 17 Although the term ‹intimacy› shares some of its imperfections with another related and fashionable term, ‹care›, the term ‹intimate› is preferred here for the contrast it draws with a ‹distant› relationship.

- 18 Kate Fletcher: Craft of Use. Post-Growth Fashion, London 2016. See also the website: craftofuse.org.

- 19 By situation I do not mean only the present moment in time, but also its nesting in the chain of encounters that precede it and those toward which the present moment projects.

- 20 See Ludwig Wittgenstein: Philosophical Investigations, Oxford 2001 [1953] and e.g.: Harold Garfinkel: Studies in Ethnomethodology, Cambridge, UK 1985; Michael Lynch: Art and Artifact in Laboratory Science, 1985; Lucy Suchman: Plans and Situated Actions. The Problem of Human-Machine Communication, New York 1987.

- 21 See e.g., Charles Goodwin: Professional Vision, in: American Anthropologist, Vol. 96, No. 3, 1994, 606–633; Christian Heath, Jon Hindmarsh: Analyzing Interaction. Video, Ethnography and Situated Conduct, in: Tim May (ed.): Qualitative Research in Action, London 2002, 99–121.

- 22 Since this process of design and construction is meant to respond, at least in part, to the preschool visits, its contingencies concern not only the work of the roboticists but also the classroom’s interactions between the children and their teachers.

- 23 Roland Barthes: Mythologies, London 1972 [1957].

- 24 Claudia Castaneda, Lucy Suchman: Robot Visions, in: Social Studies of Science, Vol. 44, No. 3, 2014; see also Jennifer Robertson: Gendering Humanoid Robots. Robo-Sexism in Japan, in: Body & Society, Vol. 16, No. 2, 2010, 1–36.

- 25 A setting for activity is a repeatedly experienced, personally ordered and edited version of a more durable and physically, economically and socially organized space-in-time; see Jean Lave, Michael Murtaugh, Olivia de la Rocha: The Dialectic of Arithmetic in Grocery Shopping, in: Barbara Rogoff, Jean Lave (eds.): Everyday Cognition, Cambridge, Mass. 1984, 71.

- 26 Michael Lynch: Art and Artifact in Laboratory Science, 1985, 13.

- 27 Erving Goffman: The Presentation of Self in Everyday Life, New York 1959, 22, 106, 112; see also Lucy Suchman: Human-Machine Reconfigurations. Plans and Situated Actions, New York 2007, 246.

- 28 Goffman: The Presentation of Self in Everyday Life, 111.

- 29 Fumihide Tanaka, Aaron Cicourel, Javier Movellan: Socialization between Toddlers and Robots at an Early Childhood Education Center, in: Proceedings of the National Academy of Sciences, Vol. 104, No. 46, 2007, 17954–17958.

- 30 As exemplified by Figure 5, in the rest of the paper I will use line drawings derived from still photographs (retrieved from the video), based on conventions for transcribing multimodal interaction by Charles Goodwin: Practices of Seeing, Visual Analysis. An Ethnomethodological Approach, in: Theo van Leeuwen, Carey Jewitt (eds.): Handbook of Visual Analysis, London 2000, 157–182.

- 31 Gail Jefferson: Glossary of Transcript Symbols with an Introduction, in: Gene H. Lerner (ed.): Conversation Analysis. Studies from the First Generation, New York 2004, 13–31.

- 32 Jon Hindmarsh, Christian Heath: Embodied Reference. A Study of Deixis in Workplace Interaction, in: Journal of Pragmatics, Vol. 32, 2000, 1855–1878.

- 33 Emanuel Schegloff: On Some Gestures’ Relation to Talk, in: J. Maxwell Atkinson, John Heritage (eds.): Structures of Social Action. Studies in Conversation Analysis, Cambridge 1984; and Hindmarsh, Heath: Embodied Reference.

- 34 Fast, Cheap & Out of Control by Errol Morris, USA 1997, Scene V, «Rodney Brooks».

- 35 Rodney Brooks: Cambrian Intelligence. The Early History of the New AI, Cambridge, Mass. 1999.

- 36 Jessica Riskin: The Defecating Duck, or, the Ambiguous Origins of Artificial Life, in: Critical Inquiry, Vol. 29, No. 4, 2003, 599–633.

- 37 Vivian Sobchack: Animation and automation, 383.

- 38 Robots as models in cognitive science are part of a larger discursive turn that promotes the idea that the human mind cannot be understood without taking into account the organism’s perceptual and motor systems. Thus, it is said that artificial intelligence can be achieved only if sensory and motor skills are also modeled (e.g., Brooks: Cambrian Intelligence).

- 39 E.g. Andy Clark: An Embodied Cognitive Science?, in: Trends in Cognitive Sciences, Vol. 3, No. 9, 1999, 345–351.

- 40 Breazeal: Designing Sociable Robots.

- 41 Ibid., 8.

- 42 Ibid.

- 43 What is more, they cannot even be specified in the design (see Suchman: Plans and Situated Actions).

- 44 Brooks: Cambrian Intelligence.

- 45 See also Morana Alač: Moving Android. On Social Robots and Body-in-Interaction, in: Social Studies of Science, Vol. 39, No. 4, 2009, 491–528.

- 46 In Alač: Moving Android, I looked at how a robot’s movement is designed in the laboratory. Here, this attention to engineering work is visible in the graduate students’ effort to make the robot move and in the PI’s guidance of that work. We see how the engineering and design work is articulated in an ‹extended› laboratory.

- 47 Goodwin: Professional Vision.

- 48 Ibid.

- 49 Aug Nishizaka: Hand Touching Hand. Referential Practice at a Japanese Midwife House, in: Human Studies, Vol. 30, No. 3, 2007, 199–217.